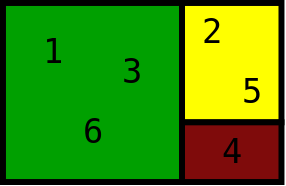

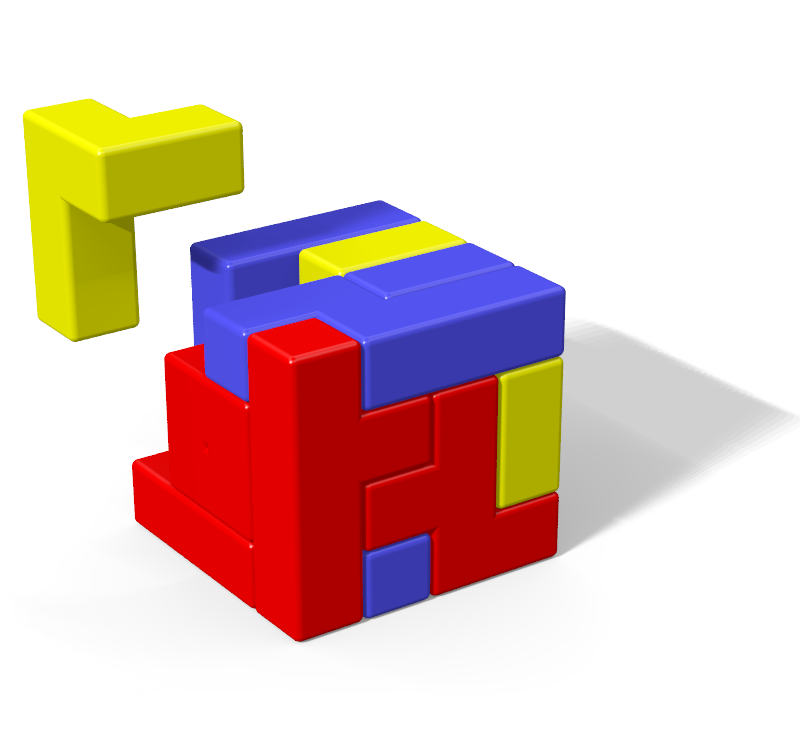

I've designed a new backtrack algorithm for solving tiling problems that I call the fixed image list algorithm (FILA). The algorithm is flexible in that it supports various ordering heuristics.1 By using a heuristic that always selects the first open cell, FILA behaves like Fletcher and de Bruijn's algorithms.2,3 If you use a heuristic that always picks the cell that has the fewest fit options, it behaves like my most constrained hole (MCH) algorithm.4 A key distinguishing feature of FILA is that ordering heuristics return neither a target cell to fill nor a target piece to place, but rather a set of image lists that should be tried (where an image is defined as a particular translation of a particular rotation of a puzzle piece). The returned set contains one list of images for each uniquely shaped puzzle piece. Although ordering heuristics that target cells are best suited to FILA, the interface also allows heuristics to target pieces; this is a subject for additional research.
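To make the interface concrete, here is a minimal Python sketch of a backtracker whose heuristic returns a set of image lists (one list per piece shape) rather than a cell or a piece. It is not the solver's actual code: the names `solve`, `images_covering`, and `make_first_cell_heuristic` are hypothetical, the board is 2D for brevity, and images are modeled as frozensets of cells. The heuristic shown is the "first open cell" one.

```python
def images_covering(cell, orientations, cells):
    """All translations of the given orientations that cover `cell` and stay on the board."""
    out = []
    for shape in orientations:
        for anchor in shape:
            img = frozenset((cell[0] + x - anchor[0], cell[1] + y - anchor[1])
                            for x, y in shape)
            if img <= cells:  # keep only images bounded by the puzzle walls
                out.append(img)
    return out

def make_first_cell_heuristic(order, lists):
    """Heuristic that returns the precalculated image lists of the first open cell."""
    def heuristic(filled, pieces_left):
        cell = next(c for c in order if c not in filled)
        return lists[cell]  # {piece_shape: [image, ...]}
    return heuristic

def solve(cells, pieces_left, filled, heuristic, placed=()):
    """Yield each solution as a tuple of (piece_shape, image) placements."""
    if len(filled) == len(cells):
        yield placed
        return
    for piece, images in heuristic(filled, pieces_left).items():
        if pieces_left.get(piece, 0) == 0:
            continue  # no copies of this shape remain
        for img in images:
            if img & filled:
                continue  # image overlaps cells that are already filled
            pieces_left[piece] -= 1
            yield from solve(cells, pieces_left, filled | img, heuristic,
                             placed + ((piece, img),))
            pieces_left[piece] += 1

# Toy puzzle (an assumption for illustration): a 2x2 board and two dominoes.
order = [(x, y) for y in range(2) for x in range(2)]
cells = frozenset(order)
domino = [((0, 0), (1, 0)), ((0, 0), (0, 1))]  # the two orientations of a domino
lists = {c: {"domino": images_covering(c, domino, cells)} for c in order}
heuristic = make_first_cell_heuristic(order, lists)
solutions = list(solve(cells, {"domino": 2}, frozenset(), heuristic))
```

Because every image in the returned lists covers the selected cell, each tiling is found exactly once. In this sketch, swapping in a different heuristic only changes which precalculated set of lists is handed back; the recursive search itself is unchanged.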

This interface allows the heuristic to select and return a precalculated set of image lists that is customized in three different ways to radically reduce the size of the lists by eliminating most images that cannot possibly fit. First, because different lists are calculated for each cell, only images bounded by the puzzle walls are included in the lists. Second, some heuristics (like that used by Fletcher's algorithm) guarantee that cells are filled in a particular order. For such fixed selection order heuristics, FILA identifies this order during initialization and, through a procedure I call priority occupancy filtering (POF), only includes images in a list for a cell that do not conflict with cells that must already be filled. Third, a technique I call neighbor occupancy filtering (NOF) (which is similar to a technique Gerard Putter described to me in a 2011 e-mail conversation) precalculates a different set of image lists for each possible occupancy state of the adjacent neighbors of each target cell. For a 3D polycube puzzle, there are up to six adjacent neighbors (in the $-x$, $-y$, $-z$, $+x$, $+y$, and $+z$ directions), and so up to 64 different sets of image lists are precalculated for each puzzle cell. Later, when a cell is selected by the heuristic, the current occupancy state of those adjacent neighbors is determined, and the set of image lists corresponding to that compound state is returned, guaranteeing no image conflicts with those neighboring cells. In this way, the number of images that must be tried by FILA at each recursive step is radically reduced, improving algorithm efficiency relative to other algorithms that make no such optimization, but without the expense of continuous list maintenance as is required by Donald Knuth's DLX algorithm.
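The NOF idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the solver's implementation: `build_nof_table` and `lookup` are hypothetical names, images are modeled as frozensets of `(x, y, z)` cells, and the occupancy state of a cell's in-box neighbors is packed into a bit mask used as a table key.

```python
# Neighbor offsets in the order -x, -y, -z, +x, +y, +z.
NEIGHBOR_OFFSETS = [(-1, 0, 0), (0, -1, 0), (0, 0, -1),
                    (1, 0, 0), (0, 1, 0), (0, 0, 1)]

def in_box_neighbors(cell, dims):
    """Adjacent neighbors of `cell` that lie inside a box of size `dims`."""
    out = []
    for off in NEIGHBOR_OFFSETS:
        n = tuple(c + o for c, o in zip(cell, off))
        if all(0 <= v < d for v, d in zip(n, dims)):
            out.append(n)
    return out

def build_nof_table(dims, images_by_cell):
    """Precalculate, for every cell and every neighbor occupancy state,
    the image lists that do not conflict with the occupied neighbors.

    images_by_cell[cell] is {piece_shape: [image, ...]}, already limited
    to images that fit within the puzzle walls.
    """
    table = {}
    for cell, per_piece in images_by_cell.items():
        nbrs = in_box_neighbors(cell, dims)
        # Up to 2**6 = 64 states; cells on the boundary have fewer.
        for mask in range(1 << len(nbrs)):
            occupied = {n for i, n in enumerate(nbrs) if (mask >> i) & 1}
            table[cell, mask] = {
                piece: [img for img in images if not (img & occupied)]
                for piece, images in per_piece.items()
            }
    return table

def lookup(table, dims, cell, filled):
    """At search time: compute the neighbor state and return the matching lists."""
    mask = 0
    for i, n in enumerate(in_box_neighbors(cell, dims)):
        if n in filled:
            mask |= 1 << i
    return table[cell, mask]

# Toy example (an assumption for illustration): a 2x2x1 box and a domino piece.
dims = (2, 2, 1)
corner = (0, 0, 0)
images_by_cell = {corner: {"domino": [frozenset({(0, 0, 0), (1, 0, 0)}),
                                      frozenset({(0, 0, 0), (0, 1, 0)})]}}
nof_table = build_nof_table(dims, images_by_cell)
result = lookup(nof_table, dims, corner, filled={(1, 0, 0)})
```

With the `+x` neighbor of the corner cell occupied, the lookup returns only the domino image that extends in `+y`. All the filtering work happens once in `build_nof_table`; during the search the cost is a handful of occupancy tests and one table lookup, which is the trade-off against continuous list maintenance described above.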

Version 2.0 of my polycube puzzle solver only includes the DLX and FILA algorithms, but the retired algorithms (de Bruijn, EMCH, and MCH) can all be recreated with FILA by using the f (first), e (estimate), and s (size) heuristics respectively. In addition, all of the other implemented heuristics, previously only available to DLX, may now also be used with FILA. Despite the additional abstraction, the new FILA algorithm (even with the new NOF optimization disabled) has improved puzzle solve times (I've seen from 10% to 35%). Enabling NOF (by simply adding -n to the command line) consistently provides additional incremental performance gains (I've seen from 5% to 27%). Because the performance gains afforded by NOF are not attributable to changes in the search tree, but rather are limited to the efficient elimination of many images that don't fit at each branch, these performance improvement percentages should not compound as puzzle size increases, but should rather be largely independent of puzzle size.

Although the examples shown here, and the polycube puzzle solver software, are limited to 3D puzzles on a cubic lattice, FILA and all of its supporting components have no such constraints and can be used to solve tiling problems in any number of dimensions on any lattice.

December 3, 2018 Edit: I changed the title of this blog entry and edited the above introduction to make it clear that FILA was not limited to 3D puzzles on a cubic lattice.
